2.4 Experiments: Hunting for Causes

Researchers gain plenty of illuminating information from descriptive studies, but when they want to track down the causes of behavior, they rely heavily on the experimental method. An experiment allows a researcher to control and manipulate the situation being studied. Instead of being a passive recorder of behavior, the researcher actively does something that he or she believes will affect people's behavior and then observes what happens. These procedures allow the experimenter to draw conclusions about cause and effect—about what causes what. The basics of experimentation are reviewed in the video Scientific Research Methods.

Experimental Variables

Imagine that you are a psychologist whose research interest is multitasking. Almost everyone multitasks these days, and you would like to know whether that's a good thing or a bad thing. Specifically, you would like to know whether or not using a handheld cell phone while driving is dangerous, an important question because most people have done so. Talking on a cell phone while driving is associated with an increase in accidents, but maybe that's just for people who are risk takers or lousy drivers to begin with. To pin down cause and effect, you decide to do an experiment.

In a laboratory, you ask participants to “drive” using a computerized driving simulator equipped with an automatic transmission, steering wheel, gas pedal, and brake pedal. The goal, you tell them, is to cover as much distance as possible on a busy highway while avoiding collisions with other cars. Some of the participants spend 15 minutes talking on the phone with a research assistant in the next room about a topic that interests them; others just drive. You are going to compare how many collisions the two groups have. The basic design of this experiment is illustrated in Figure 2.6, which you may want to refer to as you read the next few pages.

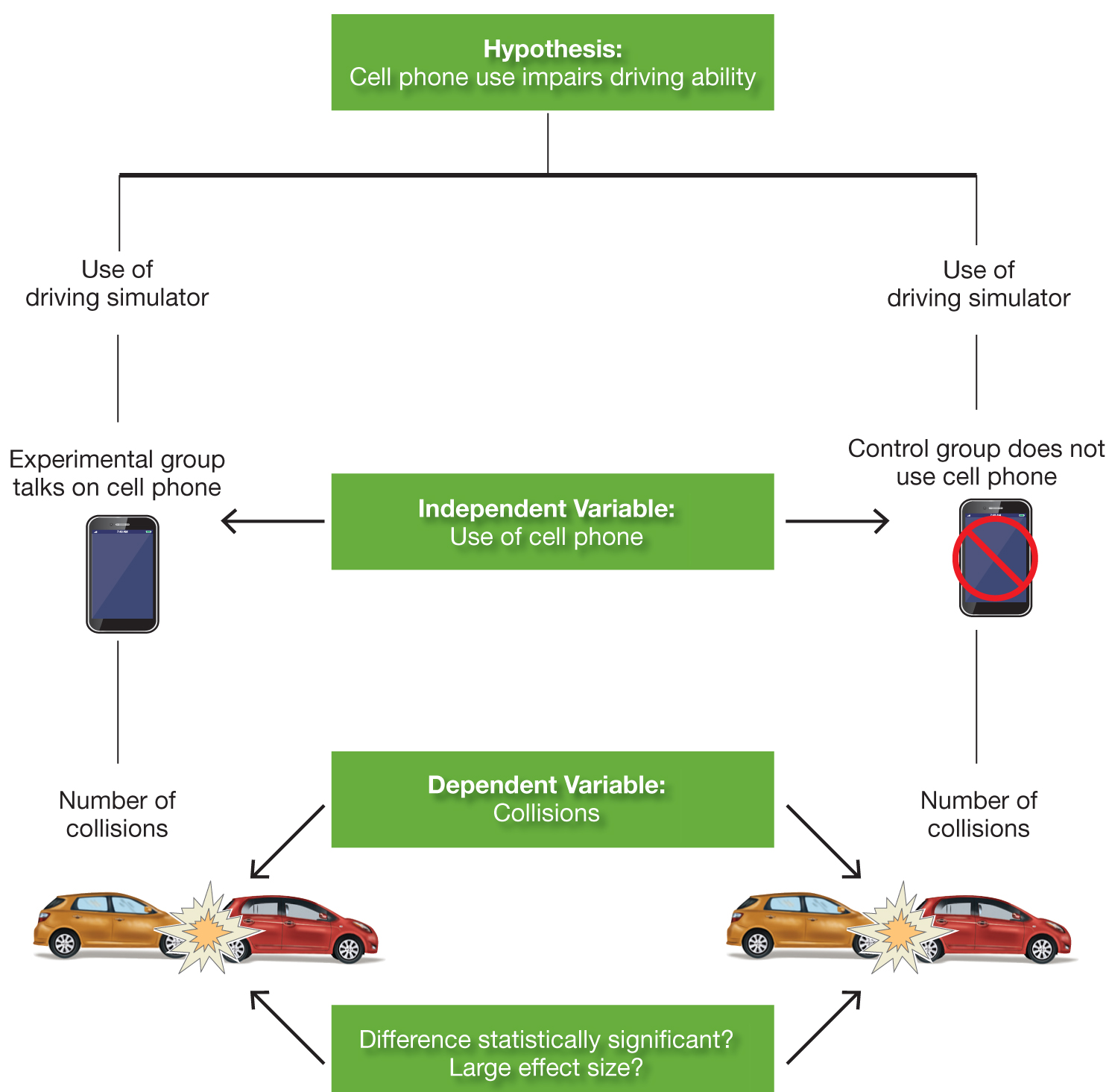

Figure 2.6

Do Cell Phone Use and Driving Mix?

The text describes this experimental design to test the hypothesis that talking on a cell phone while driving impairs driving skills and leads to accidents.

The aspect of an experimental situation manipulated or varied by the researcher is known as the independent variable. The reaction of the participants—the behavior that the researcher tries to predict—is the dependent variable. Every experiment has at least one independent and one dependent variable. In our example, the independent variable is cell phone use (use vs. nonuse). The dependent variable is the number of collisions.

Variables in the Experimental Process

Ideally, everything in the experimental situation except the independent variable is held constant, that is, kept the same for all participants. You would not have those in one group use a stick shift and those in the other group drive an automatic, unless shift type were an independent variable. Similarly, you would not have people in one group go through the experiment alone and those in the other perform in front of an audience. Holding everything but the independent variable constant ensures that whatever happens is due to the researcher's manipulation and no other factors. It allows you to rule out other interpretations.

Understandably, students often have trouble keeping independent and dependent variables straight. You might think of it this way: The dependent variable—the outcome of the study—depends on the independent variable. When psychologists set up an experiment, they think, “If I do X, the people in my study will do Y.” The “X” represents the independent variable; the “Y” represents the dependent variable.

Most variables may be either independent or dependent, depending on what the experimenter wishes to find out. If you want to know whether eating chocolate makes people nervous, then the amount of chocolate eaten is the independent variable. If you want to know whether feeling nervous makes people eat chocolate, then the amount of chocolate eaten is the dependent variable.

Experimental and Control Conditions

Experiments usually require both an experimental condition and a comparison, or control condition. In the control condition, participants are treated exactly as they are in the experimental condition, except that they are not exposed to the same treatment or manipulation of the independent variable. Without a control condition, you cannot be sure that the behavior you are interested in would not have occurred anyway, even without your manipulation. In some studies, the same people can be used in both the control and the experimental conditions; they are said to serve as their own controls. In other studies, participants are assigned to either an experimental group or a control group.

In our cell phone study, we could have drivers serve as their own controls by having them drive once while using a cell phone and once without a phone. But for this illustration, we will use two different groups. Participants who talk on the phone while driving make up the experimental group, and those who just drive along silently make up the control group. We want these two groups to be roughly the same in terms of average driving skill. It would not do to start out with a bunch of reckless roadrunners in the experimental group and a bunch of tired tortoises in the control group. We also probably want the two groups to be similar in age, education, driving history, and other characteristics so that none of these variables will affect our results. One way to accomplish this is to use random assignment of people to one group or another, perhaps by randomly assigning them numbers and putting those with even numbers in one group and those with odd numbers in the other. If we have enough participants in our study, individual characteristics that could possibly affect the results are likely to be roughly balanced in the two groups, so they are unlikely to account for any difference we find between them.
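
The even/odd procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard research tool: the function name `assign_by_parity`, the participant records, and their driving-skill scores are all hypothetical, made up only to show that a random split tends to produce groups with similar averages.

```python
import random
from statistics import mean


def assign_by_parity(participants, seed=None):
    """Randomly assign participants to two groups (hypothetical helper).

    Each participant receives a distinct random number from 1..n; even
    numbers go to the experimental group, odd numbers to the control group.
    """
    rng = random.Random(seed)
    n = len(participants)
    # Drawing n distinct numbers from 1..n gives a random permutation.
    numbers = rng.sample(range(1, n + 1), n)
    experimental, control = [], []
    for person, number in zip(participants, numbers):
        if number % 2 == 0:
            experimental.append(person)
        else:
            control.append(person)
    return experimental, control


if __name__ == "__main__":
    # Hypothetical participants with made-up driving-skill scores (not real data).
    rng = random.Random(42)
    participants = [{"id": i, "skill": rng.gauss(50, 10)} for i in range(1, 201)]

    experimental, control = assign_by_parity(participants, seed=7)
    print(len(experimental), len(control))  # 100 100 (n is even, so the split is exact)
    print(round(mean(p["skill"] for p in experimental), 1))
    print(round(mean(p["skill"] for p in control), 1))
```

Rerun it with different values of `seed` and the two group means will typically land close to each other. With only a handful of participants, however, they can differ noticeably, which is why having enough participants matters.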

Sometimes researchers use several experimental groups or conditions within a single experiment. In our cell phone study, we might want to examine the effects of short versus long phone conversations, or of conversations on different topics, such as work, personal matters, and very personal matters. In that case, we would have more than one experimental group to compare with the control group. In our hypothetical example, though, we have just one experimental group, and everyone in it will drive for 15 minutes while talking about whatever they wish.

This description does not cover all the procedures that psychological researchers use. In some kinds of studies, people in the control group get a placebo, a fake treatment or sugar pill that looks, tastes, or smells like the real treatment or medication but is inert. If the placebo produces the same result as the real thing, the improvement cannot be credited to the treatment itself; the likeliest explanation is the participants' expectations. Placebos are critical in testing new drugs because of the optimism that a potential “miracle cure” often brings with it. Medical placebos usually take the form of pills or injections that contain no active ingredients. (To see what placebos revealed in a study of Viagra for women's sexual problems, see Figure 2.7.)

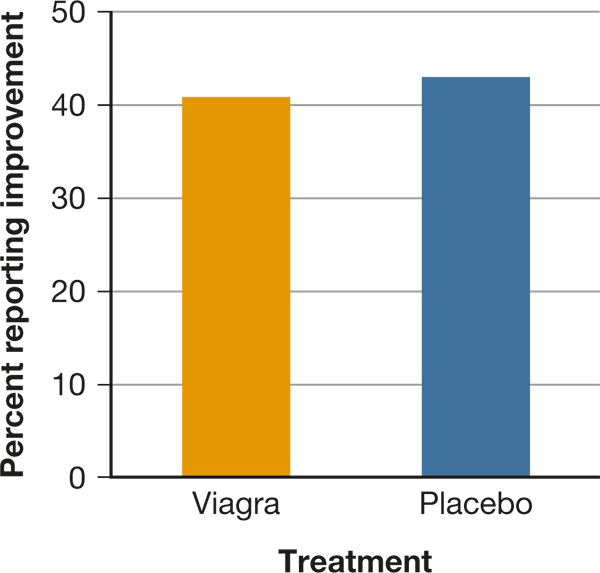

Figure 2.7

Does Viagra Work for Women?

Placebos are essential to determine whether people taking a new drug improve because of the drug or because of their expectations about it. In one study, 41 percent of women taking Viagra said their sex lives had improved. That sounds impressive, but 43 percent taking a placebo pill also said their sex lives had improved (Basson et al., 2002).

Control groups, by the way, are also crucial in many nonexperimental studies. For example, some psychotherapists have published books arguing that girls develop problems with self-esteem and confidence as soon as they hit adolescence. But unless the writers have also tested or surveyed a comparable group of teenage boys, we cannot know whether low self-esteem is a problem unique to girls or is just as typical for boys.

Experimenter Effects

Because their expectations can influence the results of a study, participants should not know whether they are in an experimental or a control group. When this is so (as it usually is), the experiment is said to be a single-blind study. But participants are not the only ones who bring expectations to the laboratory; so do researchers. And researchers' expectations, biases, and hopes for a particular result may cause them to inadvertently influence the participants' responses through facial expressions, posture, tone of voice, or some other cue.

Many years ago, Robert Rosenthal (1966) demonstrated how powerful such experimenter effects can be. He had students teach rats to run a maze. Half of the students were told that their rats had been bred to be “maze bright,” and half were told that their rats had been bred to be “maze dull.” In reality, there were no genetic differences between the two groups of rats, yet the supposedly brainy rats actually did learn the maze more quickly, apparently because of the way the students were handling and treating them. If an experimenter's expectations can affect a rodent's behavior, reasoned Rosenthal, surely they can affect a human being's behavior, and he went on to demonstrate this point in many other studies (Rosenthal, 1994). Even an experimenter's friendly smile or cold demeanor can affect people's responses.

One solution to the problem of experimenter effects is to do a double-blind study. In such a study, the person running the experiment, the one having actual contact with the participants, also does not know who is in which group until the data have been gathered. Double-blind procedures are essential in drug research. Different doses of a drug (and whether it is the active drug or a placebo) are coded in some way, and the person administering the drug is kept in the dark about the code's meaning until after the experiment. To run our cell phone study in a double-blind fashion, we could use a simulator that automatically records collisions and have the experimenter give instructions through an intercom so he or she will not know which group a participant is in until after the results are tallied.
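
The coding step in a double-blind design can also be sketched in code. This minimal Python illustration assumes a hypothetical setup rather than any particular lab's software. A coordinator holds a secret key that links opaque session codes to groups, and the experimenter works only from the codes. The key is consulted only after the simulator's collision counts are in. The function names `make_blinding_key`, `experimenter_view`, and `unblind`, the `S001`-style codes, the participant names, and the collision counts are all made up for the example.

```python
import random
from statistics import mean


def make_blinding_key(experimental, control, seed=None):
    """Coordinator's secret key: session code -> (participant, group).

    Participants are shuffled before codes are handed out, so the order of
    the codes reveals nothing about who is in which group.
    """
    rng = random.Random(seed)
    roster = [(p, "experimental") for p in experimental]
    roster += [(p, "control") for p in control]
    rng.shuffle(roster)
    return {f"S{i:03d}": entry for i, entry in enumerate(roster, start=1)}


def experimenter_view(key):
    """What the person running the sessions sees: codes only, no group labels."""
    return sorted(key)


def unblind(key, collisions_by_code):
    """After all data are gathered, break the code and average collisions per group."""
    by_group = {"experimental": [], "control": []}
    for code, collisions in collisions_by_code.items():
        _participant, group = key[code]
        by_group[group].append(collisions)
    return {group: mean(values) for group, values in by_group.items() if values}


if __name__ == "__main__":
    key = make_blinding_key(["Ana", "Ben"], ["Cai", "Dee"], seed=1)
    codes = experimenter_view(key)
    print(codes)  # ['S001', 'S002', 'S003', 'S004']
    # Hypothetical collision counts recorded automatically by the simulator.
    logged = dict(zip(codes, [3, 1, 4, 0]))
    print(unblind(key, logged))
```

Shuffling before handing out codes is the important design choice. If codes were assigned group by group, anyone who noticed that the first half of the codes belonged to phone users could break the blind.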

Think back now to the opening story on the alleged benefits of video games. Most of the studies that reported such benefits suffered from basic mistakes of experimental design. The experimental and control groups were not comparable: Often, the cognitive performance of expert gamers was compared with that of nongamers, who had no gaming experience. The studies were not blind: Players knew they had been chosen precisely because they were expert gamers, an awareness that could have influenced their performance and their motivation to do well. And the researchers knew which participants were in the experimental and control groups, knowledge that might have affected the participants' performance (Boot, Blakely, & Simons, 2011).

Because experiments allow conclusions about cause and effect, and because they permit researchers to distinguish real effects from placebo effects, they have long been the method of choice in psychology. However, like all methods, the experiment has its limitations. Just as in other kinds of studies, the participants are typically college students and may not always be representative of the larger population. Moreover, in an experiment, the researcher designs and sets up what is often a rather artificial situation, and the participants try to do as they are told. For this reason, many psychologists have called for more field research, the careful study of behavior in natural contexts such as schools and the workplace (Cialdini, 2009). Suppose you want to know whether women are more “talkative” than men. If you just ask people, most will say “Sure they are!” as the stereotype suggests. A field study would be the best way to answer this question, and indeed such a study has been done. The participants wore an unobtrusive recording device as they went about their normal lives, and the researchers found no reliable gender difference in how much people talked (Mehl et al., 2007).

Every research method has both its strengths and its weaknesses. Did you make a list of each method's advantages and disadvantages, as we suggested earlier? If so, compare it now with the one in Review 2.1.