6.3

Hearing

Like vision, the sense of hearing, or audition, provides a vital link with the world around us. Because social relationships rely so heavily on hearing, when people lose their hearing they sometimes come to feel socially isolated. That is why many people with a hearing impairment feel strongly about teaching deaf children American Sign Language (ASL) or other gestural systems, which allow them to communicate with other signers.

What We Hear

The stimulus for sound is a wave of pressure created when an object vibrates (or when compressed air is released, as in a pipe organ). The vibration (or release of air) causes molecules in a transmitting substance to move together and apart. This movement produces variations in pressure that radiate in all directions. The transmitting substance is usually air, but sound waves can also travel through water and solids, as you know if you have ever put your ear to the wall to hear voices in the next room.

As with vision, physical characteristics of the stimulus—in this case, a sound wave—are related in a predictable way to psychological aspects of our experience:

Loudness is the psychological dimension of auditory experience related to the intensity of a wave's pressure. Intensity corresponds to the amplitude (maximum height) of the wave. The more energy contained in the wave, the higher it is at its peak. Perceived loudness is also affected by how high or low a sound is. If low and high sounds produce waves with equal amplitudes, the low sound may seem quieter.

Sound intensity is measured in units called decibels (dB). A decibel is one-tenth of a bel, a unit named for Alexander Graham Bell, the inventor of the telephone. The average absolute threshold of hearing in human beings is zero decibels. Unlike inches on a ruler, decibels are not equally spaced; each 10 decibels denotes a 10-fold increase in sound intensity. On the Internet, decibel estimates for various sounds vary widely from site to site because the intensity of a sound depends on factors such as how far away it is and the particular person or object producing it. The important thing to know is that a 60-decibel conversation is not twice as intense as a 30-decibel whisper; it is 1,000 times more intense.
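The decibel arithmetic can be checked with a few lines of Python. This is a minimal sketch that assumes only the rule stated above, that each 10 decibels is a 10-fold change in intensity; the function names are made up for illustration.

```python
import math


def intensity_ratio(db_a: float, db_b: float) -> float:
    """Return how many times more intense a sound at db_a is than one at db_b."""
    # Each 10 dB step multiplies intensity by 10, so the ratio is
    # 10 raised to (difference in decibels / 10).
    return 10 ** ((db_a - db_b) / 10)


def decibel_difference(ratio: float) -> float:
    """Inverse of intensity_ratio: convert an intensity ratio to decibels."""
    return 10 * math.log10(ratio)


# A 60 dB conversation compared with a 30 dB whisper:
# three 10 dB steps, so 10 * 10 * 10.
print(intensity_ratio(60, 30))

# Doubling the intensity of a sound adds only about 3 dB.
print(decibel_difference(2))
```

The second call shows why decibel values can mislead: a sound with twice the intensity of another is only about 3 decibels higher on the scale.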

Pitch is the dimension of auditory experience related to the frequency of the sound wave and, to some extent, its intensity. Frequency refers to how rapidly the air (or other medium) vibrates—the number of times per second the wave cycles through a peak and a low point. One cycle per second is known as 1 hertz (Hz). The healthy ear of a young person normally detects frequencies in the range of 16 Hz (the lowest note on a pipe organ) to 20,000 Hz (the scraping of a grasshopper's legs).

Timbre is the distinguishing quality of a sound. It is the dimension of auditory experience related to the complexity of the sound wave, the relative breadth of the range of frequencies that make up the wave. A pure tone consists of only one frequency, but pure tones in nature are extremely rare. Usually what we hear is a complex wave consisting of several subwaves with different frequencies. Timbre is what makes a note played on a flute, which produces relatively pure tones, sound different from the same note played on an oboe, which produces complex sounds.
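One way to picture the difference between a pure tone and a complex wave is to build each from sine waves. The sketch below is illustrative only: the 440 Hz pitch, the choice of subwaves at whole-number multiples of that frequency, and their relative strengths are all assumptions, not measurements of a real flute or oboe.

```python
import math

FUNDAMENTAL_HZ = 440.0  # assumed pitch, chosen only for illustration

# (multiple of the fundamental, relative amplitude): hypothetical values
SUBWAVES = [(1, 1.0), (2, 0.5), (3, 0.3), (4, 0.2)]


def pure_tone(t: float) -> float:
    """Pressure of a single-frequency wave at time t (in seconds)."""
    return math.sin(2 * math.pi * FUNDAMENTAL_HZ * t)


def complex_tone(t: float) -> float:
    """Pressure of a wave made by adding several subwaves together."""
    return sum(
        amplitude * math.sin(2 * math.pi * multiple * FUNDAMENTAL_HZ * t)
        for multiple, amplitude in SUBWAVES
    )


period = 1 / FUNDAMENTAL_HZ  # time for one full cycle of the lowest frequency
t = 0.0003                   # an arbitrary moment, in seconds

# The two waves have different shapes at the same moment...
print(pure_tone(t), complex_tone(t))

# ...but the complex wave still repeats once per cycle of its lowest
# frequency, so both cycle at the same rate.
print(complex_tone(t), complex_tone(t + period))
```

Both waves cycle at the same rate, which is why they can be heard as the same note, while the different shapes correspond to the difference in timbre.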

When many sound-wave frequencies are present but are not in harmony, we hear noise. When all the frequencies of the sound spectrum occur, they produce a hissing sound called white noise. Just as white light includes all wavelengths of the visible light spectrum, so does white noise include all frequencies of the audible sound spectrum. The video Perceptual Magic in Art 2 will show you more about the complexity of sound.

An Ear on the World

As Figure 6.13 shows, the ear has an outer, a middle, and an inner section. The soft, funnel-shaped outer ear is well designed to collect sound waves, but hearing would still be pretty good without it. The essential parts of the ear are hidden from view, inside the head.

Figure 6.13

Major Structures of the Ear

Sound waves collected by the outer ear are channeled down the auditory canal, causing the eardrum to vibrate. These vibrations are then passed along to the tiny bones of the middle ear. Movement of these bones intensifies the force of the vibrations and transmits them to the membrane separating the middle and inner ear. The receptor cells for hearing (hair cells), located in the organ of Corti (not shown) within the snail-shaped cochlea, initiate nerve impulses that travel along the auditory nerve to the brain.

A sound wave passes into the outer ear and through an inch-long canal to strike an oval-shaped membrane called the eardrum. The eardrum is so sensitive that it can respond to the movement of a single molecule! A sound wave causes it to vibrate with the same frequency and amplitude as the wave itself. This vibration is passed along to three tiny bones in the middle ear, the smallest bones in the human body. These bones, known informally as the hammer, the anvil, and the stirrup, move one after the other, which has the effect of intensifying the force of the vibration. The innermost bone, the stirrup, pushes on a membrane that opens into the inner ear.

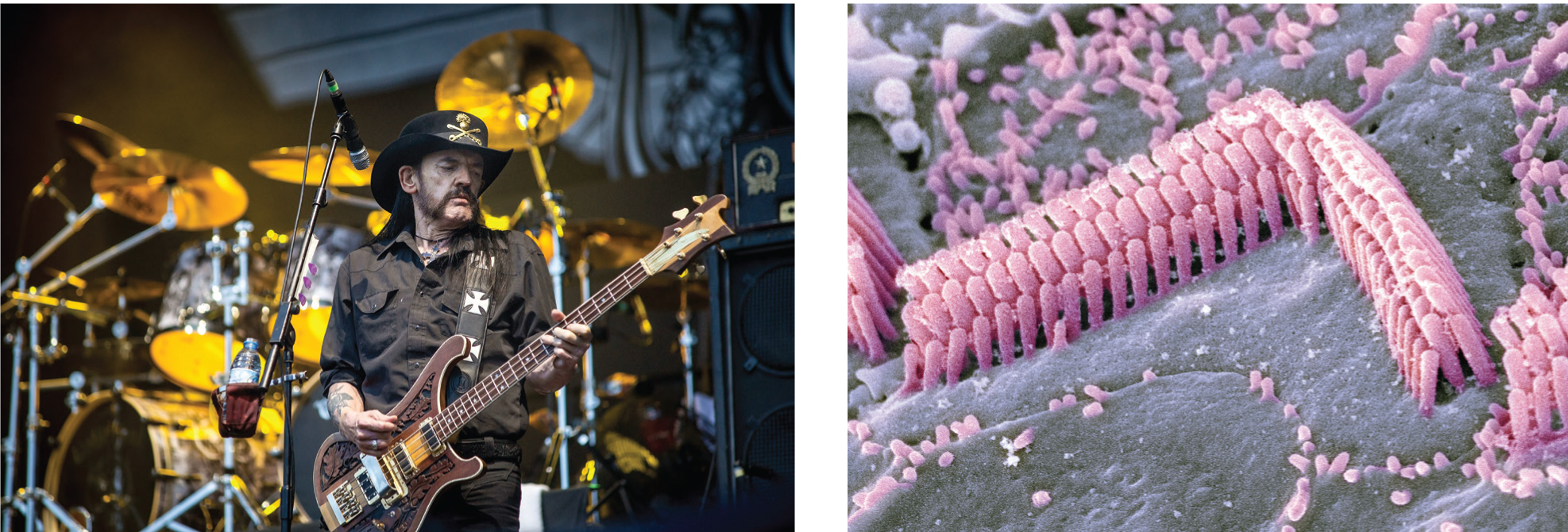

The actual organ of hearing, the organ of Corti, is a chamber inside the cochlea, a snail-shaped structure within the inner ear. The organ of Corti plays the same role in hearing that the retina plays in vision. It contains the all-important receptor cells, which in this case are called hair cells and are topped by tiny bristles, or cilia. Brief exposure to extremely loud noises, such as those from a gunshot or a jet airplane (140 dB), or sustained exposure to more moderate noises, such as those from shop tools or truck traffic (90 dB), can damage these fragile cells. The cilia flop over like broken blades of grass, and if the damage affects a critical number, hearing loss occurs. In modern societies, with their rock concerts, deafening bars and clubs, leaf blowers, jackhammers, and music players turned up to full blast, such damage is increasingly common, even among teenagers and young adults (Agrawal, Platz, & Niparko, 2008). Scientists are looking for ways to grow new, normally functioning hair cells, but hair-cell damage is currently irreversible (Liu et al., 2014).

If prolonged, the 120-decibel music at a rock concert can damage or destroy the delicate hair cells of the inner ear and impair the hearing of fans who are sitting or standing close to the speakers. The microphotograph on the right shows minuscule bristles (cilia) projecting from a single hair cell.

The hair cells of the cochlea are embedded in the rubbery basilar membrane, which stretches across the interior of the cochlea. When pressure reaches the cochlea, it causes wavelike motions in fluid within the cochlea's interior. These waves of fluid push on the basilar membrane, causing it to move in a wavelike fashion, too. Just above the hair cells is yet another membrane. As the hair cells rise and fall, their tips brush against it, and they bend. This causes the hair cells to initiate a signal that is passed along to the auditory nerve, which then carries the message to the brain. The particular pattern of hair-cell movement is affected by the manner in which the basilar membrane moves. This pattern determines which neurons fire and how rapidly they fire, and the resulting code in turn helps determine the sort of sound we hear. We discriminate high-pitched sounds largely on the basis of where activity occurs along the basilar membrane; activity at different sites leads to different neural codes. We discriminate low-pitched sounds largely on the basis of the frequency of the basilar membrane's vibration; again, different frequencies lead to different neural codes.

Could anyone ever imagine such a complex and odd arrangement of bristles, fluids, and snail shells if it did not already exist?

Constructing the Auditory World

Just as we do not see a retinal image, we also do not hear a chorus of brushlike tufts bending and swaying in the dark recesses of the cochlea. Instead, we use our perceptual powers to organize patterns of sound and to construct a meaningful auditory world.

In your psychology class, your instructor hopes you will perceive his or her voice as figure and distant cheers from the athletic field as ground. Whether these hopes are realized will depend, of course, on where you choose to direct your attention. Other Gestalt principles also seem to apply to hearing. The proximity of notes in a melody tells you which notes go together to form phrases; continuity helps you follow a melody on one violin when another violin is playing a different melody; similarity in timbre and pitch helps you pick out the soprano voices in a chorus and hear them as a unit; closure helps you understand a cell phone caller's words even when interference makes some of the individual sounds unintelligible.

Besides needing to organize sounds, we also need to know where they are coming from. We can estimate the distance of a sound's source by using loudness as a cue: We know that a train sounds louder when it is 20 yards away than when it is a mile off. To locate the direction a sound is coming from, we depend in part on the fact that we have two ears. A sound arriving from the right reaches the right ear a fraction of a second sooner than it reaches the left ear, and vice versa. The sound may also deliver slightly more energy to the right ear (depending on its frequency) because it has to get around the head to reach the left ear. It is hard to localize sounds that are coming from directly behind you or from directly above your head because such sounds reach both ears at the same time. When you turn or cock your head, you are actively trying to overcome this problem. Horses, dogs, rabbits, deer, and many other animals do not need to do this because the lucky creatures can move their ears independently of their heads.
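The size of that "fraction of a second" can be estimated with a simplified model. The sketch below assumes the ears are two points a fixed distance apart and ignores the curvature of the head; the ear separation and speed of sound are assumed round figures, and the function name is hypothetical.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: air at roughly room temperature
EAR_SEPARATION_M = 0.18         # assumed distance between the two ears


def arrival_time_difference(angle_deg: float) -> float:
    """Seconds by which a sound reaches the right ear before the left.

    angle_deg is measured in the horizontal plane from straight ahead:
    0 = directly in front, 90 = directly to the right, 180 = directly behind.
    """
    # Extra distance the sound must travel to reach the farther ear.
    extra_path_m = EAR_SEPARATION_M * math.sin(math.radians(angle_deg))
    return extra_path_m / SPEED_OF_SOUND_M_PER_S


for angle in (0, 30, 90, 150, 180):
    microseconds = arrival_time_difference(angle) * 1_000_000
    print(f"{angle:3d} degrees: {microseconds:6.1f} microseconds")
```

In this model the difference is zero for a source directly in front or directly behind, and a source at 30 degrees produces the same difference as one at 150 degrees. That ambiguity is one reason turning the head helps: it changes the angle and therefore the timing at the two ears.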

A few blind people have learned to harness the relationship between distance and sound to navigate their environment in astonishing ways—hiking, mountain biking, even golfing. They use their mouths to make clicking sounds and listen to the tiny echoes bouncing off objects, a process called echolocation, which is similar to what bats do when they fly around hunting for food. In blind human echolocators, the visual cortex responds to sounds that produce echoes—that is, sounds with information about the size and location of objects—but not to other sounds without echoes (Thaler, Arnott, & Goodale, 2011).